-

13 Jan 2023


Recently I wrote a little Ruby command line tool and wanted to package it for easy installation (and removal) on Debian-based systems. One of the challenges I encountered during the packaging process was that my command depends on the `gepub` library, which at the time did not have a `deb` package available, at least not in the official sources. This meant that I also had to create a Debian package for the `gepub` library before I could proceed with the original packaging. This article goes through the steps I took to overcome this challenge and provides a guide for anyone looking to package Ruby gems as Debian packages.

Configure the environment

A lot of Debian packaging tools recognize input through specific environment variables. To let the templating engine for the package sources know who you are, and to tell the package builder not to sign the packages cryptographically (only if you don’t intend to distribute them to a larger audience), you can set the environment like this:

    export DEBFULLNAME="Alexander E. Fischer"
    export DEBEMAIL=aef@example.net
    export DEBBUILDOPTS="-us -uc"

Gather dependencies

Because `gepub` depends on the Ruby gems `nokogiri` and `rubyzip`, and we don’t want our Debian packages to depend on the RubyGems package system, you will need to install the `ruby-zip` and `ruby-nokogiri` Debian packages through APT. You could use the following command:

    apt install ruby-zip ruby-nokogiri

Preparing a source directory

To start, you will need to use the `gem2deb` tool to create a package for the `gepub` library. Use the following command:

    gem2deb gepub --only-source-dir

This will create a source directory for a `gepub` Debian package, but will not try to build it yet. Afterwards, change into the package source directory:

    cd ruby-gepub-1.0.15

Refining the package source

Now you can edit the package metadata in the `debian/` directory.

Clean up the `debian/changelog`. First of all, if you build these packages for personal use, and not to upload them to the Debian project itself, you can remove references to issues on the Debian bug tracker. The urgency field indicates how important it is to upgrade to this version; within Debian it mainly influences how quickly a package migrates to testing. If your library is, like mine, a high-level application component and this is not a security update release, a low urgency seems plausible.

    -ruby-gepub (1.0.15-1) UNRELEASED; urgency=medium
    +ruby-gepub (1.0.15-1) UNRELEASED; urgency=low

    -  * Initial release (Closes: #nnnn)
    +  * Initial release

      -- Alexander E. Fischer <aef@example.net>  Mon, 02 Jan 2023 12:58:37 +0100
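To make the structure of the changelog header line more explicit, here is a minimal Ruby sketch that splits it into its fields. The method name `parse_changelog_header` and the regular expression are my own illustration; they only cover the simple form shown in this article and are not a replacement for Debian’s own changelog tooling.

```ruby
# Illustrative only: parse the header line of a debian/changelog entry.
# The pattern covers the simple "package (version) distribution; urgency=x"
# form and is an assumption, not a full parser for the changelog format.
CHANGELOG_HEADER =
  /\A(?<package>\S+) \((?<version>[^)]+)\) (?<distribution>\S+); urgency=(?<urgency>\w+)/

def parse_changelog_header(line)
  match = CHANGELOG_HEADER.match(line)
  return nil unless match

  {
    package: match[:package],
    version: match[:version],
    distribution: match[:distribution],
    urgency: match[:urgency]
  }
end

header = parse_changelog_header("ruby-gepub (1.0.15-1) UNRELEASED; urgency=low")
puts "#{header[:package]} #{header[:version]} (urgency: #{header[:urgency]})"
```

A line that does not match the pattern yields `nil`, so a caller can tell a malformed header from a valid one.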

`debian/control` needs to be updated in several places. Again, as this is not uploaded to Debian, there is no need to differentiate between maintainer and uploader. I also had to tweak the name of the Debian package for `rubyzip` because, against the usual convention, the Debian package for it is not called `ruby-rubyzip`. They chose the name `ruby-zip`, probably because a less redundant name is more appealing to humans.

Also, references to Debian trackers can be removed again. I had no intention of running tests when building the package, so I also removed references to this.

Last but not least, it is a good idea to write a concise description for the package. And if the description of the source gem starts with an article, the Debian package linter complains. So in my case I rewrote it a bit.

    @@ -1,20 +1,16 @@
     Source: ruby-gepub
     Section: ruby
     Priority: optional
    -Maintainer: Debian Ruby Team <pkg-ruby-extras-maintainers@lists.alioth.debian.org>
    -Uploaders: Alexander E. Fischer <aef@example.net>
    +Maintainer: Alexander E. Fischer <aef@example.net>
     Build-Depends: debhelper-compat (= 13),
                    gem2deb (>= 1),
                    ruby,
                    ruby-nokogiri (<< 2.0),
                    ruby-nokogiri (>= 1.8.2),
    -               ruby-rubyzip (<< 2.4),
    -               ruby-rubyzip (>> 1.1.1)
    +               ruby-zip (<< 2.4),
    +               ruby-zip (>> 1.1.1)
     Standards-Version: 4.5.0
    -Vcs-Git: https://salsa.debian.org/ruby-team/ruby-gepub.git
    -Vcs-Browser: https://salsa.debian.org/ruby-team/ruby-gepub
     Homepage: http://github.com/skoji/gepub
    -Testsuite: autopkgtest-pkg-ruby
     XS-Ruby-Versions: all
     Rules-Requires-Root: no

    @@ -24,5 +20,5 @@
     XB-Ruby-Versions: ${ruby:Versions}
     Depends: ${misc:Depends},
              ${ruby:Depends},
              ${shlibs:Depends}
    -Description: a generic EPUB library for Ruby.
    +Description: gepub is a generic EPUB library for Ruby.
      gepub is a generic EPUB parser/generator. Generates and parse EPUB2 and EPUB3
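The naming issue with `rubyzip` can be sketched as a small lookup. The helper `debian_package_name` and the `NAME_OVERRIDES` table are hypothetical names of my own, not part of `gem2deb`; the only exception listed is the one I actually ran into, and the underscore replacement is an assumption based on Debian package names not allowing underscores.

```ruby
# Hypothetical helper: map a gem name to the Debian package name it is
# likely to have. The "ruby-" prefix is the usual convention; the override
# table holds known exceptions such as rubyzip, which is packaged as ruby-zip.
NAME_OVERRIDES = {
  "rubyzip" => "ruby-zip"
}.freeze

def debian_package_name(gem_name)
  NAME_OVERRIDES.fetch(gem_name) do
    # Assumption: lowercase the name and turn underscores into hyphens,
    # because Debian package names must not contain underscores.
    "ruby-" + gem_name.downcase.tr("_", "-")
  end
end

%w[gepub nokogiri rubyzip].each do |gem_name|
  puts "#{gem_name} -> #{debian_package_name(gem_name)}"
end
```

Whether a guessed name really exists still has to be checked against the package archive, for example with `apt search`.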

To allow Debian tooling to understand the copyright and license of the `gepub` library I adjusted `debian/copyright`. With it I wanted to point out that the library itself is released under a 3-clause BSD license, while my packaging code is not licensed at all. The Debian standard for machine-readable license documents doesn’t seem to have an option for no license, so I just made that “None” attribute up, because at the time, I did not want to spend any time thinking about licensing. If you intend to provide your package and its source to a wider audience, or do more fancy things to build the package, it would probably be more useful for the consumers if you issued a permissive license for use and modification here.

     Upstream-Name: gepub
     Source: http://github.com/skoji/gepub

     Files: *
    -Copyright: <years> <put author's name and email here>
    -           <years> <likewise for another author>
    -License: Expat (FIXME)
    +Copyright: 2010-2014 KOJIMA Satoshi
    +License: BSD-3-clause
    + Redistribution and use in source and binary forms, with or without
    + modification, are permitted provided that the following conditions are met:
    + .
    + * Redistributions of source code must retain the above copyright
    +   notice, this list of conditions and the following disclaimer.
    + * Redistributions in binary form must reproduce the above copyright
    +   notice, this list of conditions and the following disclaimer in the
    +   documentation and/or other materials provided with the distribution.
    + * Neither the name of the <organization> nor the
    +   names of its contributors may be used to endorse or promote products
    +   derived from this software without specific prior written permission.
    + .
    + THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND
    + ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED
    + WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE
    + DISCLAIMED. IN NO EVENT SHALL <COPYRIGHT HOLDER> BE LIABLE FOR ANY
    + DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES
    + (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES;
    + LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND
    + ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT
    + (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS
    + SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

     Files: debian/*
     Copyright: 2023 Alexander E. Fischer <aef@example.net>
    -License: Expat (FIXME)
    -Comment: The Debian packaging is licensed under the same terms as the source.
    -
    -License: Expat
    - Permission is hereby granted, free of charge, to any person obtaining a copy
    - of this software and associated documentation files (the "Software"), to deal
    - in the Software without restriction, including without limitation the rights
    - to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
    - copies of the Software, and to permit persons to whom the Software is
    - furnished to do so, subject to the following conditions:
    - .
    - The above copyright notice and this permission notice shall be included in
    - all copies or substantial portions of the Software.
    - .
    - THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
    - IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
    - FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
    - AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
    - LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
    - OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN
    - THE SOFTWARE.
    +License: None
    + All rights reserved

Some of the package source files can be deleted if, again, you don’t aim to upload the package to the Debian project:

    rm debian/salsa-ci.yml
    rm debian/upstream/metadata
    rm debian/watch

Building the package

Now the `ruby-gepub` Debian package is ready to be built with the following command:

    debuild

There might be benefits to using `pbuilder` instead of `debuild`, because it uses a more isolated and standardized build environment, so the package sources are more likely to be buildable on systems other than your own. But I chose not to investigate it this time.

-

-

03 Jul 2016


I just updated our Video Streaming App to Rails 5 and am very pleased with it.

The upgrade path had just a minor bump: some test cases failed due to an incompatibility with devise 4.1.1, so I switched to 4.2.0, which was released 4 hours ago.

Action Cable now finally works like a charm: there is no need to manage the AR connections manually anymore, and the bug with deadlocks when using multiple channels is gone.

Thinking back to the early days of Action Cable, it has come a long way and gives you an easy option to harness the power of websockets from within your Rails App.

Some years ago I was quite intrigued by the websocket support of Torquebox 3, and on paper it all seemed awesome. You get a nice abstraction layer using the STOMP protocol with Stilts and perfect integration with a bridge for JMS to your backend messaging system HornetQ, all out of the box on a JBoss AS. You would have everything nicely bundled in your Rails monolith, just deploy it in Torquebox and be done, compared to the alternatives at the time, where you would deploy your Rails app to Phusion Passenger and have to set up something separate for websockets. In practice it was kind of a letdown. For example, one issue I encountered was that it wasn’t really reliable: websockets would just close down and stuff like that.

Now with Action Cable it just works™. In the mentioned application we have a chat, updates of the current viewer count of a stream, the list of logged-in users that watch it, and status changes, all funneled through Action Cable backed by Redis with no hiccups whatsoever.

-

-

15 Feb 2016


After upgrading an app to Rails 5.0.0.beta2 I started playing with Action Cable.

In this post I want to show how to do authorization in Cable Channels.

Whether you use CanCanCan, Pundit or whatever, first off you will have to authenticate the user; after that you can do your permission checks.

How to do authentication is shown in the Action Cable Examples. Basically you are supposed to fetch the `user_id` from the cookie. The example shows how to check if the user is signed in and, if not, reject the websocket connection.

If you need more granular checks, keep reading.

To understand the following code you should first familiarize yourself with the basics of Action Cable; the README is a good start.

The goal here is to identify logged-in users and do permission checks per message. One could also check permissions during initiation of the connection or the subscription to a channel, but the most granular option is to verify permissions for each message. This can be beneficial if multiple types of messages, or messages regarding different resources which require distinct permissions, are delivered from the same queue.

Also imagine permissions change while a channel is subscribed: you would probably want to stop sending messages immediately if a user’s permission to receive them is revoked.

In the `ApplicationCable` we define methods to get the user from the session and CanCanCan’s `Ability`, through which we can check permissions.

    module ApplicationCable
      class Connection < ActionCable::Connection::Base
        identified_by :current_user

        def connect
          self.current_user = find_verified_user
        end

        def session
          cookies.encrypted[Rails.application.config.session_options[:key]]
        end

        def ability
          @ability ||= Ability.new(current_user)
        end

        protected

        def find_verified_user
          User.find_by(id: session["user_id"])
        end
      end
    end

We give `Channel` access to the session and the ability object. The current user is already accessible through `current_user`.

    module ApplicationCable
      class Channel < ActionCable::Channel::Base
        delegate :session, :ability, to: :connection
        # don't allow the clients to call those methods
        protected :session, :ability
      end
    end

So far we have set up everything we need to verify permissions in our own channels.

So now we can use the ability object to deny subscription in general, or in this case to filter which messages are sent.

Notice: Currently, using ActiveRecord from inside a stream callback depletes the connection pool. I reported this issue under #23778: ActionCable can deplete AR’s connection pool. Therefore we have to ensure the connection is checked back into the pool ourselves.

    class StreamUpdatesChannel < ApplicationCable::Channel
      def subscribed
        queue = "stream_updates:#{params[:stream_id]}"

        stream_from queue, -> (message) do
          ActiveRecord::Base.connection_pool.with_connection do
            if ability.can? :show, Stream.find(params[:stream_id])
              transmit ActiveSupport::JSON.decode(message), via: queue
            end
          end
        end
      end
    end
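The effect of checking permissions per message can be tried outside of Rails with a small plain Ruby sketch. `DemoAbility` and `build_stream_callback` are hypothetical stand-ins of my own and not part of Action Cable or CanCanCan; the sketch assumes that the ability object reflects the current permissions every time it is asked.

```ruby
require "json"

# Hypothetical stand-in for a CanCanCan Ability: it only knows which
# stream ids the user is currently allowed to :show.
class DemoAbility
  def initialize(visible_stream_ids)
    @visible_stream_ids = visible_stream_ids.dup
  end

  def can?(action, stream_id)
    action == :show && @visible_stream_ids.include?(stream_id)
  end

  def revoke(stream_id)
    @visible_stream_ids.delete(stream_id)
  end
end

# Builds a callback comparable to the lambda passed to stream_from:
# the permission is checked again for every single message.
def build_stream_callback(ability, stream_id, delivered)
  lambda do |raw_message|
    delivered << JSON.parse(raw_message) if ability.can?(:show, stream_id)
  end
end

ability = DemoAbility.new([42])
delivered = []
callback = build_stream_callback(ability, 42, delivered)

callback.call('{"viewers": 1}') # delivered, the permission is present
ability.revoke(42)
callback.call('{"viewers": 2}') # dropped, the permission was revoked
puts "delivered #{delivered.length} of 2 messages"
```

Because the check sits inside the callback rather than in `subscribed`, a revoked permission takes effect with the next message instead of only with the next subscription.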

-

-

-

A year ago we had an issue using Git from TeamCity, “JSchException: Algorithm negotiation fail”, because `diffie-hellman-group-exchange-sha256` wasn’t supported (see “Git connection fails due to unsupported key exchange algorithm” on the JetBrains issue tracker).

Today we had a similar issue when using the TeamCity plugin for RubyMine.

Our TeamCity installation is served through a reverse proxy by an Apache web server. The only common algorithm between Java and our TLS configuration is `TLS_DHE_RSA_WITH_AES_128_CBC_SHA`.

Java’s JCE provider has an upper limit of 1024 bits for the key size (2048 bits since Java 8), so the connection fails because we require at least 4096 bits. In RubyMine you get the message “Login error: Prime size must be multiple of 64, and can only range from 512 to 2048 (inclusive)”.
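The rule quoted in the error message is simple enough to express as a small check. This Ruby sketch only mirrors the wording of the message; the method name `dh_prime_size_accepted?` and the `max_bits` parameter are my own and do not correspond to any Java API.

```ruby
# Sketch of the constraint from the error message: the prime size must be a
# multiple of 64 and lie between 512 and an upper limit (2048 on Java 8).
def dh_prime_size_accepted?(bits, max_bits: 2048)
  (bits % 64).zero? && bits.between?(512, max_bits)
end

[1024, 2048, 4096].each do |bits|
  puts "#{bits} bits accepted: #{dh_prime_size_accepted?(bits)}"
end
```

With the Java 8 limit, the 4096 bits our server requires fall outside the accepted range, which matches the failure we saw.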

-

To fix this on a Debian 8 “Jessie” system with OpenJDK 8 installed, follow these steps.

Install the Bouncy Castle Provider:

sudo aptitude install libbcprov-java

Link the JAR in your JRE:

sudo ln -s /usr/share/java/bcprov.jar /usr/lib/jvm/java-8-openjdk-amd64/jre/lib/ext/bcprov.jar

Modify the configuration file `/etc/java-8-openjdk/security/java.security`:

    security.provider.2=org.bouncycastle.jce.provider.BouncyCastleProvider

-

-

-

We recently started experimenting with Ansible for automation of multi-step software installations on our servers. Quickly, we realized that Ansible version 1.7.2, which is available through Debian Jessie’s official repositories, doesn’t include all the features we needed. Sadly, the missing `recursive` option for the `acl` module is first available in Ansible 2.0, which is not yet fully released. Official packages and Debian Testing packages were only available for Ansible version 1.9.4.

In the end I decided to investigate how to manually build a Debian package. Here is what I found:

-

Acquire the source repository

At first, I cloned the official Git repository from GitHub:

    cd /usr/local/src
    git clone --recursive https://github.com/ansible/ansible.git

Then I checked out the latest 2.0.0 RC tag:

    cd ansible
    git checkout -b v2.0.0-0.7.rc2 tags/v2.0.0-0.7.rc2
    git submodule update

-

Install build-dependencies

The `mk-build-deps` command is part of the `devscripts` package. The `debian` make task requires `asciidoc` to be installed. You can install these like this:

    sudo aptitude install asciidoc devscripts

To install the development packages necessary to build the Ansible package I generated a temporary package named `ansible-build-deps-depends`. This temporary package states dependencies on all the packages that are necessary for building the actual Ansible package.

    make DEB_DIST=jessie debian
    mk-build-deps --root-cmd sudo --install --build-dep deb-build/jessie/ansible-2.0.0/debian/control
    sudo aptitude markauto asciidoc

-

Build the Ansible package

To generate the actual `.deb` file, I issued the following command:

    make DEB_DIST=jessie deb

-

Install Ansible

And afterwards I installed the freshly built Ansible `.deb` package like this:

    sudo gdebi deb-build/jessie/ansible_2.0.0-0.git201512071813.cc98528.headsv20007rc2\~jessie_all.deb

In case you don’t have `gdebi` installed, for example if you don’t have a desktop environment running, you can use the following, more noisy alternative:

    sudo dpkg -i deb-build/jessie/ansible_2.0.0-0.git201512071813.cc98528.headsv20007rc2\~jessie_all.deb
    sudo apt-get install -f

-

Remove the build-dependencies again

After I built the Ansible package I just removed the temporary `ansible-build-deps-depends` package again. `APT` will then automatically remove all the development dependencies that it depends upon, leaving a clean system.

    sudo aptitude purge ansible-build-deps-depends

-

-

-

Lately I spent a lot of time exploring the details of GnuPG and the underlying OpenPGP standard. I found that there are many outdated guides and tutorials which still find their way into the hands of newcomers. There seems to be a cloud of confusion around the topic, which leads to many misinformed users, but also to the idea that OpenPGP is hard to understand.

This article is my attempt to fight some of this confusion and misinformation.

-

Naming confusion

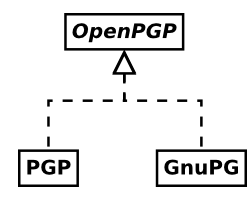

A lot of people seem to have problems separating the different terms.

- OpenPGP is a standard for managing cryptographic identities and related keys mostly described by RFC 4880. It also provides a framework for issuing and verification of digital signatures and for encrypting and decrypting of data using the aforementioned identities.

- PGP, meaning Pretty Good Privacy, was the first implementation of the system now standardized as OpenPGP. It is proprietary software currently owned and being developed by Symantec.

- GnuPG, the GNU Privacy Guard, is probably the most widespread free software implementation of the OpenPGP standard. Some lazy people also call it GPG because its executable is called `gpg`. This confuses people even more.

-

“OpenPGP is just for e-mail”

It is true that OpenPGP was created to allow secure e-mail communication. But OpenPGP can do far more than that.

One major field of usage for OpenPGP is the secure distribution of software releases. Almost all of the big Linux distributions and lots of other software projects rely on GnuPG to verify that the downloaded packages are indeed identical to those made by the original authors.

OpenPGP can encrypt and digitally sign arbitrary files. Also, by using so called ASCII-armored messages, OpenPGP can be used to send encrypted and signed messages through every system that is able to relay multi-line text messages.

In addition, OpenPGP identity certificates can be used to authenticate to SSH servers. They can also be used to verify the identities of remote servers through Monkeysphere.

All in all, OpenPGP is a fully-fledged competitor to the X.509 certificate system used in SSL/TLS and S/MIME. Personally I think OpenPGP actually outperforms X.509 in every regard.

-

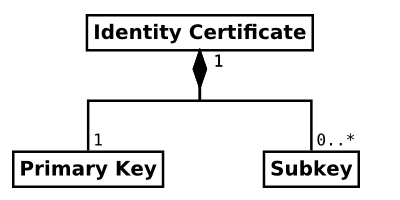

Certificates and keys

Far too many things in OpenPGP are called keys by many people. In OpenPGP, an identity is formed by one or more asymmetric crypto keys. Those keys are linked together by digital signatures. Also, there is a whole lot of other useful data contained within this structure.

A lot of times, I have seen that describing this whole bunch of different pieces of data as “a key” just makes it harder for people to understand the system. Calling it an identity certificate describes it far better and allows people to distinguish between it and the actual crypto keys within.

-

Fingerprints and other key identifiers

Each key in OpenPGP (of the current version 4) can be securely identified by a sequence of 160 bits, called a fingerprint. This sequence is usually represented by 40 hexadecimal characters to be easier to read and compare. OpenPGP identity certificates are identified by the fingerprints of their primary keys.

The fingerprint is designed so that it is currently considered infeasible to deliberately generate another certificate which has the same fingerprint. Behind the scenes this is achieved by using the cryptographic hash function SHA-1.
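For the curious, the calculation itself is short. The following Ruby sketch follows the description of version 4 fingerprints in RFC 4880, section 12.2; the method name `v4_fingerprint` is my own, and the input in the usage line is placeholder data, not a real key packet.

```ruby
require "digest"

# Computes an OpenPGP version 4 fingerprint as described in RFC 4880,
# section 12.2: SHA-1 over the octet 0x99, the two-octet big-endian length
# of the public key packet body, and the body itself.
def v4_fingerprint(public_key_packet_body)
  body = public_key_packet_body.b
  prefix = [0x99, body.bytesize].pack("Cn")
  Digest::SHA1.hexdigest(prefix + body).upcase
end

# Placeholder bytes, NOT a real key packet; they only demonstrate the call.
dummy_body = "\x04demo-key-material".b
puts v4_fingerprint(dummy_body)
```

In practice you would let GnuPG display the fingerprint instead of computing it by hand; the sketch is only meant to show that the fingerprint depends on nothing but the public key packet.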

Versions of GnuPG prior to version 2.1 did not display the full fingerprint by default. Instead they displayed a so-called key ID. Key IDs are excerpts from the end of the fingerprint sequence. The short variant is 8 hexadecimal characters long, the long variant 16.

    Fingerprint:  0123456789ABCDEF0123456789ABCDEF01234567
    Long key ID:  89ABCDEF01234567
    Short key ID: 01234567

Even today, these key IDs are displayed prominently within GnuPG’s output and lots of OpenPGP related GUI programs and websites display them. They all fail to warn the user that neither the short, nor the long key ID can be used to securely identify a certificate, because they have been shown to be easily spoofable. Please don’t rely on these, or even better, avoid them completely and use full fingerprints instead.
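How little of the fingerprint a key ID actually contains becomes obvious when the derivation is written down. This is a minimal Ruby sketch; the method name `key_ids` is my own and the input is the example fingerprint from above.

```ruby
# Derives both key ID variants from a full fingerprint: they are simply
# the last 16 and the last 8 hexadecimal characters.
def key_ids(fingerprint)
  hex = fingerprint.delete(" ").upcase
  unless hex.match?(/\A[0-9A-F]{40}\z/)
    raise ArgumentError, "expected 40 hexadecimal characters"
  end

  { long: hex[-16, 16], short: hex[-8, 8] }
end

ids = key_ids("0123456789ABCDEF0123456789ABCDEF01234567")
puts "Long key ID:  #{ids[:long]}"
puts "Short key ID: #{ids[:short]}"
```

The short key ID carries only 32 of the 160 fingerprint bits, the long one 64, which is why neither is a substitute for comparing the full fingerprint.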

-

Secure exchange of identity certificates

Probably the biggest obstacle of establishing secure communication through cryptography is making sure that both parties own a copy of each other’s public asymmetric key. If a malicious third party is able to provide both communication partners with fake keys, the whole cryptography can be circumvented by performing a MITM attack.

In OpenPGP, communication partners need to exchange copies of each other’s identity certificates prior to usage. To deny possible attackers, this needs to be done through a secure channel. Sadly, secure channels are very rare. One way could be to burn the certificates to CDs and exchange these at a personal meeting.

The certificates could also be uploaded to a file server and downloaded by both communication partners, provided that they verify the fingerprints of the certificates afterwards. The fingerprints still need to be exchanged through a secure channel.

-

Key servers

Key servers are specialized file servers that allow anyone to publish OpenPGP certificates. Some key server networks continuously synchronize their contents, so you only need to upload your certificates to one of the network participants. Most key servers don’t allow deleting any content that has ever been uploaded to them, so make sure not to publish things you’d later regret.

Be aware that usually, key servers are not certificate authorities. Everyone can upload any certificates they like and usually, no verification is performed. There is no reason to ever assume the certificates received from a generic key server to be authentic in any way. Just like with any other insecure channel, you have to compare the certificate’s fingerprints with a copy received through a secure channel.

Instead, key servers are a great way to receive updated information about known certificates. For example, if an OpenPGP certificate expires, it can be renewed by its owner and the update can then be published to the key servers again. Another important scenario would be an identity certificate that has been compromised. The owner can then publish a revocation certificate to the key servers to inform other people that the certificate is no longer safe to be used.

So key servers are less an address book than a mechanism for certificate updates. OpenPGP users are well advised to update certificates before each usage or at regular intervals.

-

-

-

Some time ago I acquired a BeagleBone Black, a hard-float ARM-based embedded mini PC, quite similar to the widely popular Raspberry Pi. Mainly I did this because I was disappointed in the Raspberry Pi for its need of non-free firmware to boot it up and because you had to rely on third-party maintained Linux distributions of questionable security maintenance for it to function.

Because some people gave me the impression that you could easily install an unmodified, official Debian operating system on it, I chose to take a look at the BeagleBone Black.

After tinkering a bit with the device, I realized that this is not true at all. There are some third-party maintained Debian-based distributions available, but at their peak of security awareness, they offer MD5 fingerprints from a non-HTTPS-accessible website for image validation. I’d rather not trust in that.

When installing an official Debian Wheezy, the screen stays black. When using Jessie (testing) or Sid (unstable), the system seems to boot up correctly, but the USB host port malfunctions and I’m unable to attach a keyboard. While I was looking for a way to get the USB port to work, some people hinted that it might be possible to fix this problem by changing some Linux kernel configuration parameters. Sadly I cannot say whether this actually works or not, because it seems to work only for boards of revision C and higher. My board, from the third-party producer element14, seems to be a revision B.

Still, I would like to share with the world how I managed to cross-compile the armmp kernel in Debian Sid with a slightly altered configuration on an `x86_64` Debian Jessie system.

Creating a clean Sid environment for building

First of all, I created a fresh build environment using `debootstrap`:

    sudo debootstrap sid sid
    sudo chroot sid /bin/bash

The following instructions are what I did inside the `chroot` environment.

Then I added some decent package sources, and especially made sure there is a line for source packages. You might want to exchange the URL with some repository close to your location; the Debian CDN sometimes leads to strange situations in my experience.

    cat <<FILE > /etc/apt/sources.list
    deb http://cdn.debian.net/debian sid main
    deb-src http://cdn.debian.net/debian sid main
    FILE

Then I added the foreign armhf architecture to this environment, so I could acquire packages from that:

    dpkg --add-architecture armhf
    apt-get update

Next, I installed basic building tools and the building dependencies for the Linux kernel itself:

    apt-get install build-essential fakeroot gcc-arm-linux-gnueabihf libncurses5-dev
    apt-get build-dep linux

-

Configuring the Linux kernel package source

Still within the earlier created chroot environment I then prepared to build the actual package.

I reset the locale settings to have no dependency on actual installed locale definitions:

    export LANGUAGE=C
    export LANG=C
    export LC_ALL=C
    unset LC_PAPER LC_ADDRESS LC_MONETARY LC_NUMERIC LC_TELEPHONE LC_MESSAGES LC_COLLATE LC_IDENTIFICATION LC_MEASUREMENT LC_CTYPE LC_TIME LC_NAME

I then acquired the kernel source code:

    cd /tmp
    apt-get source linux
    cd linux-3.16.7-ckt2

I configured the name prefix for the cross-compiler executable to be used:

export CROSS_COMPILE=arm-linux-gnueabihf-

Now in the file `debian/config/armhf/config.armmp`, I changed the Linux kernel configuration. In my case I changed just the following line:

    CONFIG_TI_CPPI41=y

You might need completely different changes here.

-

Building the kernel package

Because for some reason this package expects the compiler executable to be `gcc-4.8` and I couldn’t find out how to teach it otherwise, I just created a symlink to the cross-compiler:

    ln -s /usr/bin/arm-linux-gnueabihf-gcc /usr/local/bin/arm-linux-gnueabihf-gcc-4.8

Afterwards, the build process was started by the following command:

dpkg-buildpackage -j8 -aarmhf -B -d

The `-j` flag defines the maximum number of tasks that will be run in parallel. The optimal setting for fastest compilation should be the number of CPU cores and/or hyper-threads you’ve got on your system.

The `-d` flag makes the build ignore missing dependencies. In my case, the process complained about `python` and `gcc-4.8` not being installed, even though they actually were installed. I guess it meant `python:armhf` and `gcc-4.8:armhf`, but installing these is not even possible on my `x86_64` system, even with multiarch enabled. So in the end I decided to ignore these dependencies and the compilation went fine by the looks of it.

The compilation process takes quite a while and outputs a lot of `.deb` and `.udeb` packages into the `/tmp` directory. The actual kernel package I needed was named `linux-image-3.16.0-4-armmp_3.16.7-ckt2-1_armhf.deb` in my case.
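If you don’t want to hard-code the value for `-j`, it can be derived from the machine. This Ruby sketch assembles the same `dpkg-buildpackage` invocation with the processor count that Ruby’s `Etc.nprocessors` reports; the method name `kernel_build_command` is my own, and the command is only printed, not executed.

```ruby
require "etc"

# Builds the dpkg-buildpackage invocation used in this article, with -j set
# to the number of processors Ruby reports for this machine by default.
def kernel_build_command(jobs = Etc.nprocessors)
  ["dpkg-buildpackage", "-j#{jobs}", "-aarmhf", "-B", "-d"]
end

puts kernel_build_command.join(" ")
```

Returning the command as an array keeps each argument separate, so it could be passed to `system(*kernel_build_command)` without any shell quoting concerns.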

Creating a bootable image using the new kernel

For this, I left the Sid chroot environment again. I guess you don’t even have to.

Now I used the vmdebootstrap tool to create an image that can then be put onto an SD card.

First of all I had to install the tool from the experimental repositories, because the versions in Sid and Jessie were bugged somehow. That might not be needed anymore in the future.

So I added the experimental repository to the package management system:

    cat <<FILE > /etc/apt/sources.list.d/experimental.list
    deb http://ftp.debian.org/debian experimental main contrib non-free
    deb-src http://ftp.debian.org/debian experimental main contrib non-free
    FILE

And afterwards I did the installation:

    apt-get update
    apt-get -t experimental install vmdebootstrap

I created a script named `customise.sh` that sets the bootloader up inside the image, with the following content (many thanks to Neil Williams):

    #!/bin/sh

    set -e

    rootdir=$1

    # copy u-boot to the boot partition
    cp $rootdir/usr/lib/u-boot/am335x_boneblack/MLO $rootdir/boot/MLO
    cp $rootdir/usr/lib/u-boot/am335x_boneblack/u-boot.img $rootdir/boot/u-boot.img

    # Setup uEnv.txt
    kernelVersion=$(basename `dirname $rootdir/usr/lib/*/am335x-boneblack.dtb`)
    version=$(echo $kernelVersion | sed 's/linux-image-\(.*\)/\1/')
    initRd=initrd.img-$version
    vmlinuz=vmlinuz-$version

    # uEnv.txt for Beaglebone
    # based on https://github.com/beagleboard/image-builder/blob/master/target/boot/beagleboard.org.txt
    cat >> $rootdir/boot/uEnv.txt <<EOF
    mmcroot=/dev/mmcblk0p2 ro
    mmcrootfstype=ext4 rootwait fixrtc

    console=ttyO0,115200n8

    kernel_file=$vmlinuz
    initrd_file=$initRd

    loadaddr=0x80200000
    initrd_addr=0x81000000
    fdtaddr=0x80F80000

    initrd_high=0xffffffff
    fdt_high=0xffffffff

    loadkernel=load mmc \${mmcdev}:\${mmcpart} \${loadaddr} \${kernel_file}
    loadinitrd=load mmc \${mmcdev}:\${mmcpart} \${initrd_addr} \${initrd_file}; setenv initrd_size \${filesize}
    loadfdt=load mmc \${mmcdev}:\${mmcpart} \${fdtaddr} /dtbs/\${fdtfile}

    loadfiles=run loadkernel; run loadinitrd; run loadfdt
    mmcargs=setenv bootargs console=tty0 console=\${console} root=\${mmcroot} rootfstype=\${mmcrootfstype}

    uenvcmd=run loadfiles; run mmcargs; bootz \${loadaddr} \${initrd_addr}:\${initrd_size} \${fdtaddr}
    EOF

    mkdir -p $rootdir/boot/dtbs
    cp $rootdir/usr/lib/linux-image-*-armmp/* $rootdir/boot/dtbs

Afterwards the image was built using the following command:

Note that you might want to change the Debian mirror here as well.

    sudo -H \
      vmdebootstrap \
      --owner `whoami` \
      --log build.log \
      --log-level debug \
      --size 2G \
      --image beaglebone-black.img \
      --verbose \
      --mirror http://cdn.debian.net/debian \
      --arch armhf \
      --distribution sid \
      --bootsize 128m \
      --boottype vfat \
      --no-kernel \
      --no-extlinux \
      --foreign /usr/bin/qemu-arm-static \
      --package u-boot \
      --package linux-base \
      --package initramfs-tools \
      --custom-package [INSERT PATH TO YOUR SID CHROOT]/tmp/linux-image-3.16.0-4-armmp_3.16.7-ckt2-1_armhf.deb \
      --enable-dhcp \
      --configure-apt \
      --serial-console-command '/sbin/getty -L ttyO0 115200 vt100' \
      --customize ./customise.sh

The result is a file called `beaglebone-black.img` that can easily be put onto an SD card by using the `dd` command.

After I put that image on my SD card and booted it, the USB problem was still there. Maybe the fix isn’t working at all, or maybe it just doesn’t work on my hardware revision. At least it did boot like the regular Sid image I tried before, and now I have the knowledge to conduct further experiments.

It was a hell of a job to find out how to do it, involving tons of guides and howtos giving contradictory instructions and being outdated to varying degrees. In the end, what helped the most was talking to a lot of people on IRC.

So I hope this is helpful for someone else. Should you be aware of a way to actually fix the USB host problem, please send me a comment.

-

-

-

As GodObject was affected by the OpenSSL Heartbleed bug, we used this as a chance to reevaluate our TLS setup and discovered a possibly dangerous oddity in how our preferred certificate authority StartSSL handles authentication to their account management web interface.

To acquire X.509 certificates which are trusted by a wide range of browsers and other communication partners, we have been using the certificate authority service StartSSL (run by the Israel-based company StartCom) for several years now. StartSSL is our choice because they are far less expensive than other CAs and at the same time offer very good customer support via Jabber (XMPP) instant messaging, even outside normal working hours.

When looking at our affected certificates which were issued by the StartSSL intermediate CA for servers of paying customers (class 2) I realized that their EKU extension tagging allows using them also on the client side of a TLS session. Typically this is a good thing because systems like SMTP or XMPP daemons act both in the role of client and server.

Now if you want to be a customer of StartCom’s service you need to create an account. Using this account you can manage your personal details and credit card information, validate your domains and email addresses, request validations, request and revoke certificates and possibly more. Some of these actions are free and some cost money.

To manage this account you receive a free certificate signed by the StartSSL intermediate CA for clients, which is tagged through the EKU extension to only be used as a client in TLS. This certificate is installed in your browser and is used for authentication in HTTPS.

Because in X.509 you normally can only trust a root CA as a whole, and not just specific intermediate CAs, I asked myself if maybe every certificate issued by StartSSL that is usable on the client side can be used to manage the account. After our certificates had been revoked and replaced, I tested this theory with one of our new server certificates and was actually able to log into my StartSSL account.

As far as I see it, this means every class 2 server certificate issued by StartSSL for which the private key leaked (for example through the OpenSSL Heartbleed bug), can not only be used to attack the affected server/service but also gives full access to the StartCom account which originally requested the certificate.

An attacker could, for example, revoke the customer’s original client certificate before the customer could revoke the leaked server certificate, and thereby buy more time to use the leaked certificate. Or, as a denial of service, an attacker could just revoke the certificates of other servers associated with the account. Another attack could be spending the customer’s money on StartSSL services using the credit card registered with StartSSL, leaving the customer with the trouble of proving it wasn’t them.

If I’m not totally mistaken here, this should be an even bigger reason to fix your affected systems and revoke your potentially leaked certificates. Also I would like to see StartCom working on disabling this as I honestly don’t see any argument for granting each server certificate full account control access.
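To see whether one of your own certificates carries the client-side usage discussed above, you can look at its Extended Key Usage extension with the openssl command line tool. This is a minimal sketch. The function name and the file name server.pem are assumptions for illustration. It only reports what the certificate itself declares, and a certificate without any EKU extension is not restricted either, which this check does not cover.

```shell
# Sketch only: allows_client_auth and server.pem are illustrative names.
# Succeeds (exit status 0) if the certificate's Extended Key Usage
# extension lists TLS client authentication.
allows_client_auth() {
    openssl x509 -in "$1" -noout -text | grep -q 'TLS Web Client Authentication'
}

# Typical use:
#   allows_client_auth server.pem && echo "usable as a TLS client certificate"
```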

-

-

05 Feb 2013


I just upgraded our continuous integration server to TeamCity 8 EAP.

I am really excited about the new functionality to group projects and create subprojects, which will be introduced in 8.0. It will greatly help with organizing related projects.

If you have never heard of TeamCity and are looking for a great CI server, I can only encourage you to give it a try. The reason we chose TeamCity was its great support for Ruby. It supports rvm, gemsets, bundler and rspec, and integrates nicely into RubyMine through a plugin. It also supports feature branches, which is really great if you use Git with such a branching model.

-

-

15 May 2012


TorqueBox allows running distributed transactions over multiple databases and messaging queues. But what can you do if you also want to operate on the filesystem?

This can be done with XADisk. This post outlines the necessary steps to set up XADisk.

It will enable you to work on your filesystem and database in distributed transactions from within your Rails application. -

Notice

At the time of writing, this does not work out of the box.

There is an issue in IronJacamar which prevents deployment of XADisk as a resource adapter. Also, the XADisk 1.2 adapter does not comply with the JCA spec.

Both issues are resolved and the fixes should be included in the next releases. To work around this, I had to modify the adapter. You can get it here.

-

Prerequisites

- TorqueBox 2.x installation. See TorqueBox Installation

- “TorqueBox ready” Rails application. See Preparing your Rails application

- XA capable database.

Verify that TorqueBox and your application work by executing the following tasks from your Rails app.

    rake torquebox:check
    rake torquebox:deploy
    rake torquebox:run

Your application should now be available at localhost:8080.

To enable full distributed transaction support in PostgreSQL, you’ll need to set max_prepared_transactions in postgresql.conf to something greater than zero. Zero is the usual default in most installations.

http://torquebox.org/documentation/2.0.1/transactions.html#d0e5250 -
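A quick way to see what a given postgresql.conf currently sets is sketched below. The helper name and the example path are assumptions, and the parsing is deliberately simple: it expects the name = value form and ignores include directives and values set elsewhere. PostgreSQL only reads this setting at server start, so a restart is needed after changing it.

```shell
# Sketch only: configured_prepared_transactions is an illustrative helper name.
# Prints the last uncommented max_prepared_transactions value in the given
# file, or nothing if the setting is absent or commented out (in which case
# the built-in default applies).
configured_prepared_transactions() {
    sed -n 's/^[[:space:]]*max_prepared_transactions[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$1" | tail -n 1
}

# Typical use (the path is an example and varies between installations):
#   configured_prepared_transactions /etc/postgresql/9.1/main/postgresql.conf
```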

Deploying the XADisk Resource Adapter

To deploy it, put XADisk.rar in torquebox/jboss/standalone/deployments.

Next the resource adapter needs to be configured.

To do so, open torquebox/jboss/standalone/configuration/standalone.xml.

Modify the resource adapters subsystem:

    <subsystem xmlns="urn:jboss:domain:resource-adapters:1.0">
      <resource-adapters>
        <resource-adapter>
          <archive>XADisk.rar</archive>
          <transaction-support>XATransaction</transaction-support>
          <config-property name="xaDiskHome">/opt/xadisk/xadisk1</config-property>
          <config-property name="instanceId">xadisk1</config-property>
          <connection-definitions>
            <connection-definition class-name="org.xadisk.connector.outbound.XADiskManagedConnectionFactory" jndi-name="java:global/xadisk1" pool-name="XADiskConnectionFactoryPool">
              <config-property name="instanceId">xadisk1</config-property>
              <xa-pool>
                <min-pool-size>1</min-pool-size>
                <max-pool-size>5</max-pool-size>
              </xa-pool>
            </connection-definition>
          </connection-definitions>
        </resource-adapter>
      </resource-adapters>
    </subsystem>

Make sure to modify the property “xaDiskHome” according to your system.

This is the working directory of the XADisk instance, where it stores its transaction logs among other things. The instanceId is the name of this instance.

Also the JNDI name of the connection factory must be configured.

Start TorqueBox. XADisk should now be successfully deployed.

The logs should state something similar to this:

    01:30:55,552 INFO [org.jboss.as.server.deployment] (MSC service thread 1-5) JBAS015876: Starting deployment of "XADisk.rar"
    01:30:56,090 INFO [org.jboss.as.deployment.connector] (MSC service thread 1-4) JBAS010406: Registered connection factory java:global/xadisk1
    01:30:56,252 INFO [org.jboss.as.deployment.connector] (MSC service thread 1-1) JBAS010401: Bound JCA ConnectionFactory [java:global/xadisk1]
    01:30:58,772 INFO [org.jboss.as.server] (DeploymentScanner-threads - 2) JBAS018559: Deployed "XADisk.rar"
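If you prefer to check this from a script, something like the following sketch could work. The function name is made up, and the log location standalone/log/server.log is an assumption about a default JBoss layout, so adjust it to your installation.

```shell
# Sketch only: xadisk_deployed is an illustrative name.
# Succeeds if the given log file contains the "Deployed" message for XADisk.rar.
xadisk_deployed() {
    grep -q 'Deployed "XADisk.rar"' "$1"
}

# Typical use (the log path is an assumption):
#   xadisk_deployed torquebox/jboss/standalone/log/server.log && echo "XADisk is deployed"
```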

-

Using the adapter in a Rails app

To use the resource adapter the connection factory has to be looked up via JNDI.

The JavaDoc of XADisk lists the supported operations.

    # wrap database and filesystem in one transaction
    TorqueBox.transaction do
      # lookup the xadisk connection factory
      factory = TorqueBox::Naming.context.lookup("java:global/xadisk1")
      # acquire a connection
      conn = factory.connection
      begin
        a = Article.create! :title => "test"
        file = java.io.File.new "/opt/filestore/#{a.id}"
        conn.create_file file
      ensure
        conn.close
      end
    end

More about distributed transactions in TorqueBox can be found here.

-